This free Windows-based tool lets you quickly locate large files on your hard disk and see which folders are the largest, so you can free up disk space. It works on all NTFS volumes by directly reading the NTFS Master File Table (MFT), which gives a huge performance boost over the standard Windows file access methods.

Main features

- Fast performance, analyze hard disks in seconds!

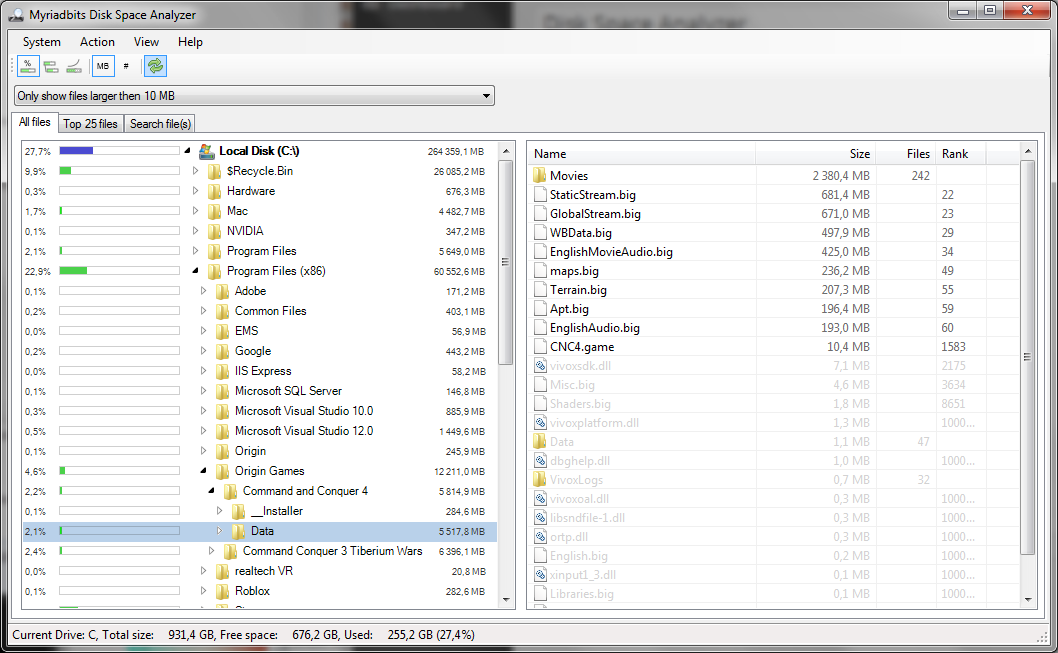

- Shows disk usage per directory/folder as percentages (absolute, relative to the parent folder, and logarithmic), displayed graphically as colored bars.

- Filter large folders and files.

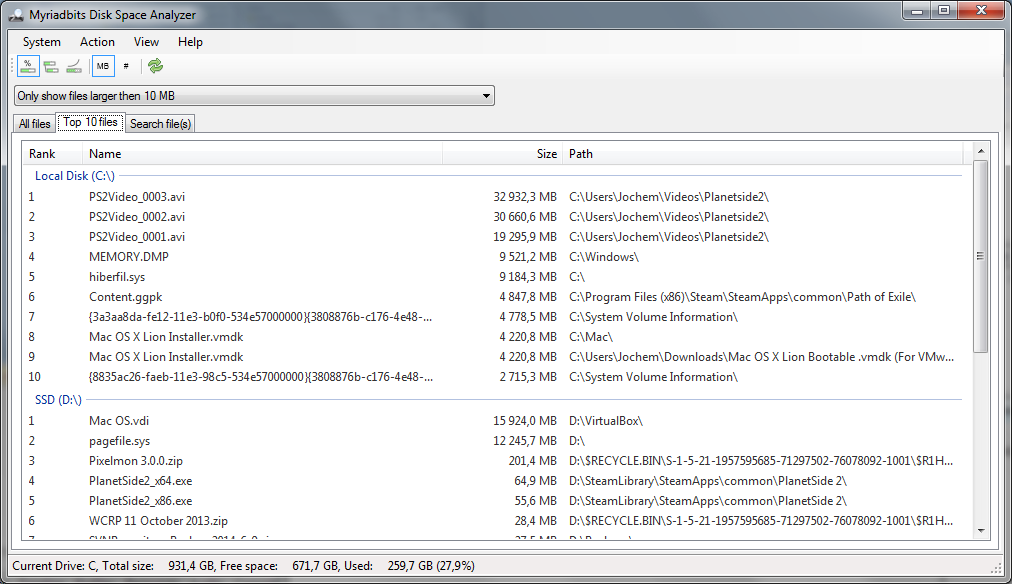

- View a list of the X largest files per hard disk, with their paths (X from 10 to 2500).

- Context menus to quickly open Windows Explorer or a command prompt in any directory, show properties, and open files.

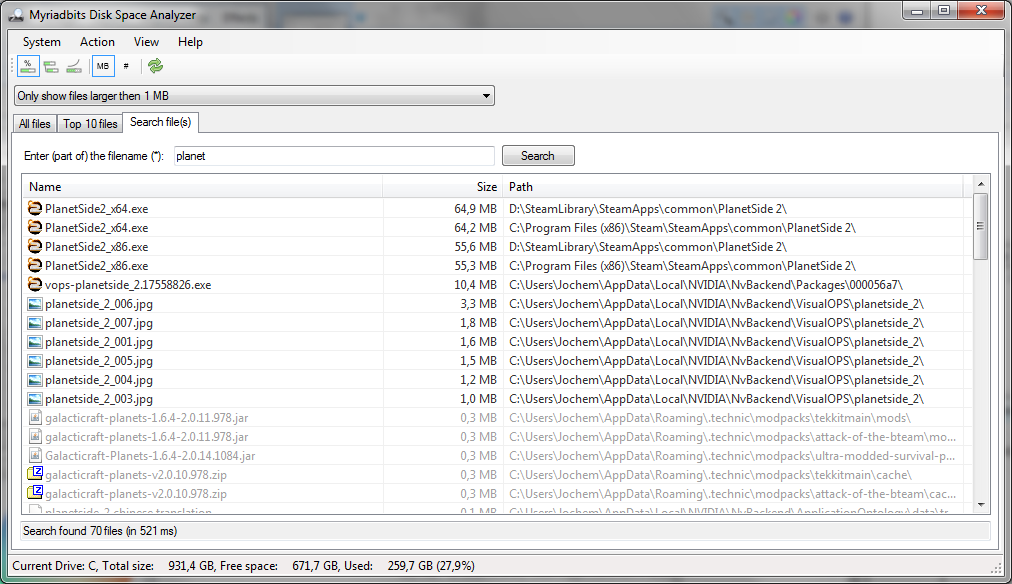

- Fast search utility to find any file on your hard disk(s) (on my own hard disks, within a second!).

- All tables can be sorted by filenames, folders, sizes and rankings.

- Settings dialog to customize the application to your liking.
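Once the file records are in memory, the percentage columns and the largest-files list are cheap computations. The following is a minimal sketch in Python, not the tool's actual C# code. It assumes folder sizes are already known, and it uses a logarithmic formula of my own choosing (log of the folder size relative to log of the total). The function names `usage_percentages` and `largest_files` are hypothetical.

```python
import heapq
import math


def usage_percentages(size, parent_size, total_size):
    """Return (percent of total, percent of parent, log-scaled percent) for one folder."""
    pct_total = 100.0 * size / total_size if total_size else 0.0
    pct_parent = 100.0 * size / parent_size if parent_size else 0.0
    # Hypothetical log scale: log(1 + size) relative to log(1 + total), so small
    # folders still get a visible bar next to very large ones.
    pct_log = 100.0 * (math.log1p(size) / math.log1p(total_size)) if total_size else 0.0
    return pct_total, pct_parent, pct_log


def largest_files(files, n=10):
    """Return the n largest (name, size) pairs, biggest first (the UI offers n = 10..2500)."""
    # heapq.nlargest avoids a full sort when n is much smaller than the file count.
    return heapq.nlargest(n, files, key=lambda f: f[1])


files = [("video.iso", 4000), ("app.log", 250), ("disk.vhd", 9000)]
print(largest_files(files, 2))  # [('disk.vhd', 9000), ('video.iso', 4000)]
print(usage_percentages(250, 1000, 10000)[:2])  # (2.5, 25.0)
```

A heap-based top-N is a natural fit here because the list size (at most 2500) is tiny compared with the number of files on a large disk.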

Screen shots

Disk Space Analyzer, Start/scan dialog in action

Installation instructions

Download the latest version here:

It is a normal setup installation: just run the setup program and the application will be installed on your hard disk.

Note: you might receive a web browser warning that this file hasn’t been downloaded much, but you can safely ignore that message.

Usage

Just start the application; it will display a list of all NTFS hard disks that can be analyzed. Press the Start button and the file information of all selected hard disks will be read into memory.

Don’t forget that there are context menus (right-click) in the tree and views that give some additional options!

Use the settings screen to modify user settings such as size units and colors. All user interface selections/filters are remembered.

Some background info

During my work I frequently encountered large (server) disks with huge numbers of files on them. When trying to clean up the data, I always grumbled about the slow Windows tools: Why does Windows Explorer always grind through all files and subfolders to determine a folder's size (the sizes are already known by NTFS…)? Why does the file indexer consume so many of my precious hard disk read/write cycles when it doesn’t seem to give a performance boost? It should be possible to do this faster!
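Each NTFS file record carries the file's size and, in its $FILE_NAME attribute, a reference to its parent directory. Folder totals can therefore be computed from a flat list of records, without walking the directory tree the way Explorer does. Below is a minimal Python sketch of that idea, not the library's C# implementation. It assumes the records have already been reduced to `(parent, size, is_directory)` tuples and that the tree is well formed. The record numbers other than the root (record 5) are hypothetical.

```python
from collections import defaultdict

ROOT_RECORD = 5  # NTFS stores the root directory in MFT record 5; it is its own parent


def folder_sizes(records):
    """Sum file sizes into every ancestor directory.

    records maps record number -> (parent record number, size in bytes, is_directory).
    Hard links are ignored: each record is assumed to have a single parent.
    """
    totals = defaultdict(int)
    for parent, size, is_dir in records.values():
        if is_dir:
            continue
        cur = parent
        # Walk up the parent chain; a missing (orphaned) parent simply ends the walk.
        while cur in records:
            totals[cur] += size
            nxt = records[cur][0]
            if nxt == cur:  # reached the root, whose parent reference points to itself
                break
            cur = nxt
    return dict(totals)


records = {
    ROOT_RECORD: (ROOT_RECORD, 0, True),  # root directory
    64: (ROOT_RECORD, 0, True),           # a subfolder (hypothetical record number)
    65: (64, 300, False),
    66: (64, 700, False),
    67: (ROOT_RECORD, 50, False),
}
print(folder_sizes(records))  # {64: 1000, 5: 1050}
```

All the input comes from data that is read once. No directory has to be opened and enumerated just to learn its size.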

So I studied the NTFS ‘standard’ on the internet (there is not much information to find). From multiple scraps of documentation and the (excellent) ‘NTFS Documentation’ by Richard Russon and Yuval Fledel, I was able to create my own NTFS library.

Because I wanted to prove (to myself; I know this sounds a bit sick/nerdy…) that performance is a matter of program design rather than programming language, I wrote this entire API in C#. Performance can be increased by smart program design. And guess what: the API is really fast!

On my own system (hard disk 25% used), scanning the entire NTFS volume takes under 4.8 seconds. A wildcard search over the entire hard disk(s) takes less than 1 second…
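Once all file names are in memory, a wildcard search is just a pattern match over a list, with no disk access at all. Here is a minimal Python sketch, assuming Windows-style `*` and `?` wildcards matched case-insensitively against the file name part of each path. It uses the standard `fnmatch.translate` to compile the pattern once. Note that `fnmatch` also accepts `[...]` character sets, which Windows wildcards do not. The function name `wildcard_search` is hypothetical.

```python
import fnmatch
import re


def wildcard_search(paths, pattern):
    """Return paths whose file name matches a wildcard pattern (* and ?), case-insensitively."""
    # Compile the pattern once instead of re-parsing it for every file name.
    regex = re.compile(fnmatch.translate(pattern.lower()))
    hits = []
    for path in paths:
        name = path.rsplit("\\", 1)[-1]  # file name part of a Windows path
        if regex.match(name.lower()):
            hits.append(path)
    return hits


paths = [r"C:\Data\report.PDF", r"C:\Data\notes.txt", r"C:\img\photo1.jpg"]
print(wildcard_search(paths, "*.pdf"))  # ['C:\\Data\\report.PDF']
```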

This NTFS API reads sequentially through all NTFS file records, in contrast with other tools that start by reading the index tables (the ‘directories’) and therefore need random disk reads to locate the file information blocks.
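To make the sequential approach concrete, here is a minimal Python sketch of walking a raw $MFT buffer record by record. It is not the library's C# code, and it stops at the fixed FILE record header. Reading the attributes ($FILE_NAME, $DATA) that hold names and sizes, and obtaining the raw $MFT bytes in the first place, are left out. The header offsets follow the layout described in the NTFS Documentation mentioned above. I assume 1024-byte records and a 512-byte update sequence stride, which are common but not guaranteed for every volume.

```python
import struct

RECORD_SIZE = 1024  # assumption: the common MFT file record size
SECTOR_SIZE = 512   # assumption: update-sequence stride of 512 bytes


def apply_fixups(record):
    """Restore the sector-end bytes saved in the update sequence array (in place).

    Returns False if a sector-end marker does not match, i.e. the record is torn.
    """
    usa_ofs, usa_count = struct.unpack_from("<HH", record, 4)
    if (usa_count == 0 or usa_ofs + 2 * usa_count > len(record)
            or (usa_count - 1) * SECTOR_SIZE > len(record)):
        return False
    usn = record[usa_ofs:usa_ofs + 2]
    for i in range(1, usa_count):
        end = i * SECTOR_SIZE
        if record[end - 2:end] != usn:
            return False
        record[end - 2:end] = record[usa_ofs + 2 * i:usa_ofs + 2 * i + 2]
    return True


def parse_header(record):
    """Decode the fixed 40-byte FILE record header."""
    (_sig, _usa_ofs, _usa_count, lsn, seq, links, attr_ofs, flags,
     used, alloc, base_ref) = struct.unpack_from("<4sHHQHHHHIIQ", record, 0)
    return {
        "lsn": lsn,
        "sequence": seq,
        "hard_links": links,
        "first_attribute": attr_ofs,  # offset where the attribute list starts
        "in_use": bool(flags & 0x01),
        "is_dir": bool(flags & 0x02),
        "used_size": used,
        "allocated_size": alloc,
        "base_record": base_ref & 0xFFFFFFFFFFFF,  # low 48 bits = record number
    }


def scan_mft(data):
    """Walk a raw $MFT byte buffer sequentially, yielding (record number, header) for in-use records."""
    for rec_no in range(len(data) // RECORD_SIZE):
        record = bytearray(data[rec_no * RECORD_SIZE:(rec_no + 1) * RECORD_SIZE])
        if record[:4] != b"FILE" or not apply_fixups(record):
            continue  # never-used slot or damaged record
        header = parse_header(record)
        if header["in_use"]:
            yield rec_no, header
```

The loop only ever moves forward through the buffer. That is the access pattern that lets a disk stream the whole table instead of seeking from one directory index to the next.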

All source code is licensed under the GPL and can be downloaded here:

It is written entirely in C# with Visual Studio 2013 (Express Edition). Feel free to examine the code, but be warned: almost no code is completely bug-free (not even mine…).

For the critics: yes, the performance of this API might be improved by some factor if it were written in C++, but ease of maintenance and readability would suffer.

30-6-2014, Jochem Bakker